
The Backstory: Too Much AWS in Our DNA

Like many teams, we started our cloud journey deeply tied to AWS. Every service we wrote had hardcoded aws-sdk-go or boto3 calls — whether it was S3 uploads, EventBridge publishing, or secret retrieval.

This was fine until leadership said:

👉 “We’re moving workloads to GCP. How long will migration take?”

Suddenly, every AWS call in our codebase turned into a migration tax. We were rewriting logic instead of focusing on features.

As developers, we hate wasting time on boilerplate rewrites. So we asked: what if we never had to care whether the bucket was in AWS or GCP?

The “Aha!” Moment: Build Once, Run Anywhere

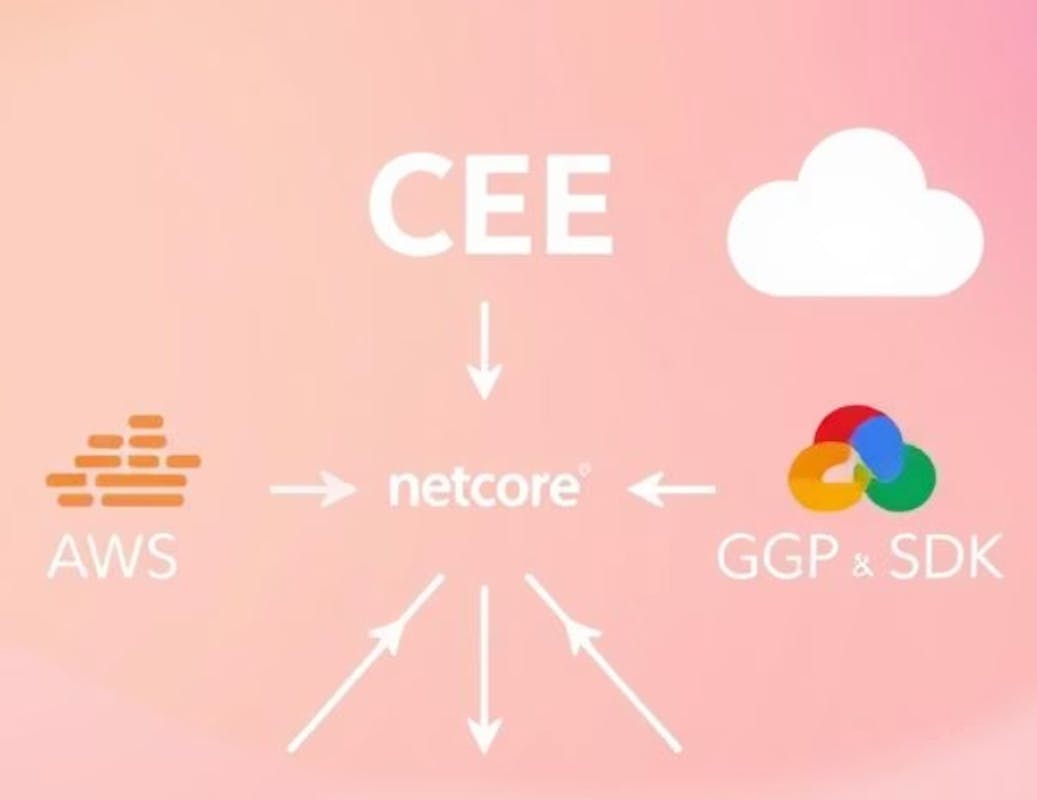

We realized we could wrap AWS and GCP SDKs behind a unified interface — one that speaks the same language no matter which cloud is underneath.

- One set of methods for storage (upload, download, delete)

- One method for events (publish)

- One method for secrets (get_secret)
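Here is a minimal Python sketch of what such a unified interface could look like. The class and function names (`StorageService`, `EventService`, `SecretService`, `InMemoryStorage`, `archive_report`) are hypothetical and only mirror the list above; they are not the Netcore SDK's actual API. An in-memory backend stands in for real S3 or GCS clients so the example runs without credentials.

```python
from abc import ABC, abstractmethod


class StorageService(ABC):
    """Provider-neutral object storage (hypothetical names, not the SDK's API)."""

    @abstractmethod
    def upload(self, bucket: str, key: str, data: bytes) -> None: ...

    @abstractmethod
    def download(self, bucket: str, key: str) -> bytes: ...

    @abstractmethod
    def delete(self, bucket: str, key: str) -> None: ...


class EventService(ABC):
    """Provider-neutral event publishing."""

    @abstractmethod
    def publish(self, topic: str, payload: dict) -> None: ...


class SecretService(ABC):
    """Provider-neutral secret lookup."""

    @abstractmethod
    def get_secret(self, name: str) -> str: ...


class InMemoryStorage(StorageService):
    """Stand-in backend so the sketch runs offline; real ones would wrap a cloud SDK."""

    def __init__(self) -> None:
        self._objects: dict[tuple[str, str], bytes] = {}

    def upload(self, bucket: str, key: str, data: bytes) -> None:
        self._objects[(bucket, key)] = data

    def download(self, bucket: str, key: str) -> bytes:
        return self._objects[(bucket, key)]  # raises KeyError if the object is missing

    def delete(self, bucket: str, key: str) -> None:
        self._objects.pop((bucket, key), None)  # deleting a missing object is a no-op


def archive_report(storage: StorageService, report: bytes) -> None:
    """Application code: depends only on the interface, never on a provider SDK."""
    storage.upload("my-bucket", "reports/latest.txt", report)
```

The application function never imports boto3 or a GCP client; swapping clouds means passing a different `StorageService` implementation.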

Switching clouds would just mean:

```bash
export CLOUD_PROVIDER=aws  # or gcp
```

The application code wouldn’t change at all. That’s how the Netcore Cloud SDK was born.
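Under the hood, an environment-variable switch like this usually boils down to a small factory that reads `CLOUD_PROVIDER` and returns the matching backend. The sketch below assumes hypothetical placeholder classes `S3Storage` and `GcsStorage` (real ones would wrap the AWS and GCP SDKs) and a hypothetical `storage_from_env` function; it does not reflect the SDK's actual internals.

```python
import os


class S3Storage:
    """Placeholder for an AWS-backed implementation (hypothetical)."""

    provider = "aws"


class GcsStorage:
    """Placeholder for a GCP-backed implementation (hypothetical)."""

    provider = "gcp"


# Registry of supported providers: adding a cloud means adding one entry here.
_BACKENDS = {"aws": S3Storage, "gcp": GcsStorage}


def storage_from_env(default: str = "aws"):
    """Return a storage backend chosen by the CLOUD_PROVIDER environment variable."""
    name = os.getenv("CLOUD_PROVIDER", default).strip().lower()
    try:
        backend_cls = _BACKENDS[name]
    except KeyError:
        raise ValueError(
            f"unsupported CLOUD_PROVIDER {name!r}; expected one of {sorted(_BACKENDS)}"
        ) from None
    return backend_cls()
```

Failing fast on an unknown value is a deliberate choice in this sketch: a typo such as `CLOUD_PROVIDER=gpc` stops the service at startup instead of silently falling back to AWS.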

The Real Challenge: Private SDK Distribution

Building the abstraction was only half the battle. The real fun started when we had to ship the SDK internally.

- For Go, the module needed to be pulled from a private GitLab repo.

- For Python, we wanted a clean pip install experience — again from a private GitLab PyPI registry.

And developers? We didn’t want them to jump through hoops. It had to feel just like using a public package.

Solving It for Go

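Go is strict about private modules. By default, `go get` downloads modules through the public proxy (proxy.golang.org) and verifies them against the public checksum database (sum.golang.org), and neither can see a private GitLab repo. We cracked it with `.netrc` for credentials and `GOPRIVATE` to tell Go to skip the proxy and checksum database for our module paths: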

Credentials go in `~/.netrc`:

```text
machine gitlab.com
login <username>
password <personal-access-token>
```

Then point Go at the private module:

```bash
export GOPRIVATE='gitlab.com/netcorecloud/*'
go get gitlab.com/netcorecloud/cee/nc-cloud-sdk-go@latest
```

From there, you can import "gitlab.com/netcorecloud/cee/nc-cloud-sdk-go/cloud" and Go happily fetches from GitLab.

No hacks. No manual cloning. Just Go modules working as expected. ✅
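Why does `gitlab.com/netcorecloud/*` cover the SDK's module path? The Go documentation describes `GOPRIVATE` as a comma-separated list of glob patterns (in `path.Match` syntax) matched against module path prefixes: a pattern with N path elements is compared with the first N elements of the module path. The Python port below approximates that rule for illustration only. It matches element by element with `fnmatch.fnmatchcase`, which differs from Go's `path.Match` in edge cases (Go negates character classes with `[^...]`, `fnmatch` with `[!...]`), and `match_prefix_patterns` is our own name, not a Go or SDK API.

```python
from fnmatch import fnmatchcase


def match_prefix_patterns(globs: str, module_path: str) -> bool:
    """Approximate Go's GOPRIVATE rule: does any glob match a prefix of module_path?"""
    target_parts = module_path.split("/")
    for glob in globs.split(","):
        if glob.endswith("/"):
            glob = glob[:-1]  # Go trims one trailing slash from each pattern
        if not glob:
            continue  # skip empty entries such as "a,,b"
        glob_parts = glob.split("/")
        # A pattern with N elements needs at least N elements in the module path.
        if len(target_parts) < len(glob_parts):
            continue
        # Compare element by element, so '*' never crosses a '/' boundary.
        prefix = target_parts[: len(glob_parts)]
        if all(fnmatchcase(part, pat) for part, pat in zip(prefix, glob_parts)):
            return True
    return False
```

With our pattern, `gitlab.com/netcorecloud/cee/nc-cloud-sdk-go` matches because its first three elements, `gitlab.com/netcorecloud/cee`, fit `gitlab.com/netcorecloud/*`, so Go fetches it directly from GitLab.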

Solving It for Python

Python had its own quirks. We used GitLab’s PyPI package registry.

Here’s what worked for us:

```bash
pip install nc-cloud-sdk-py \
  --index-url https://<username>:<token>@gitlab.com/api/v4/projects/69710082/packages/pypi/simple
```

Or, even better, in requirements.txt:

```text
--extra-index-url https://__token__:${GITLAB_TOKEN}@gitlab.com/api/v4/projects/69710082/packages/pypi/simple
nc-cloud-sdk-py==0.0.1
```

Developers now just set `GITLAB_TOKEN` and run `pip install -r requirements.txt`.

Suddenly, our private SDK felt like a public library.
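The `${GITLAB_TOKEN}` placeholder works because pip expands environment variables in requirements files. pip's documentation limits this to the POSIX `${NAME}` form with an uppercase name (no `$NAME` or `%NAME%`), and, as far as we can tell from pip's source, an unset variable is left as-is. The sketch below mimics that behaviour so you can see what the resolved line looks like; `expand_requirement_line` is our own helper, not a pip API.

```python
import os
import re

# pip only recognises ${NAME} with uppercase letters, digits and underscores.
ENV_VAR_RE = re.compile(r"\$\{([A-Z0-9_]+)\}")


def expand_requirement_line(line: str, env=None) -> str:
    """Substitute ${VAR} placeholders roughly the way pip does (approximation)."""
    env = os.environ if env is None else env

    def _sub(match: re.Match) -> str:
        value = env.get(match.group(1))
        # Unset or empty variables keep their placeholder instead of becoming "".
        return value if value else match.group(0)

    return ENV_VAR_RE.sub(_sub, line)
```

This is also why the token never lands in version control: `requirements.txt` stays generic, and each developer or CI job supplies its own `GITLAB_TOKEN`.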

Writing Code That Doesn’t Care About the Cloud

Here’s where it gets fun. Same code. Two clouds.

Go Example

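```go
// Requires imports of context, log, os, the SDK's cloud package
// (gitlab.com/netcorecloud/cee/nc-cloud-sdk-go/cloud) and its iface package.
provider, err := cloud.GetCloudProviderFactory(os.Getenv("CLOUD_PROVIDER"))
if err != nil {
	log.Fatalf("creating cloud provider: %v", err)
}

storageClient, err := provider.StorageClient(&iface.S3GcsInput{
	Context: context.Background(),
	Bucket:  "my-bucket",
	Region:  "us-west-2", // AWS
	// ProjectId: "my-gcp-project", // GCP
})
if err != nil {
	log.Fatalf("creating storage client: %v", err)
}

// Same call regardless of which cloud backs storageClient.
storageClient.Upload("folder/myfile.txt", []byte("Hello Cloud!"))
```

Python Example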

```python
import os

from nc_cloud_sdk_py import CloudServiceFactory

# "aws" or "gcp"; falls back to AWS when CLOUD_PROVIDER is unset
cloud_provider = os.getenv("CLOUD_PROVIDER", "aws")

cloud_service_factory = CloudServiceFactory(cloud_provider=cloud_provider)

# Upload works on both S3 and GCS
with open("file.txt", "rb") as f:
    cloud_service_factory.storage_service.upload("my-bucket", "folder/file.txt", f.read())
```

The only difference between AWS and GCP?

👉 An environment variable (plus provider-specific settings, such as `Region` vs. `ProjectId` in the Go example).
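Because the application only calls `storage_service.upload(bucket, key, data)`, code written this way can even run with no cloud at all. A minimal sketch using the standard library's `unittest.mock.Mock` as a stand-in for `cloud_service_factory.storage_service`; `publish_file` is a hypothetical application function, not part of the SDK.

```python
from unittest.mock import Mock


def publish_file(storage_service, key: str, data: bytes, bucket: str = "my-bucket") -> None:
    """Hypothetical app code: relies only on upload(bucket, key, data)."""
    storage_service.upload(bucket, key, data)


# In a unit test, a Mock replaces the real storage service,
# so neither S3 nor GCS is touched.
fake_storage = Mock()
publish_file(fake_storage, "folder/file.txt", b"Hello Cloud!")
fake_storage.upload.assert_called_once_with("my-bucket", "folder/file.txt", b"Hello Cloud!")
```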

Debug Mode: Because Nothing Works First Try

Of course, cloud SDKs never work right the first time. That’s why we added debug mode:

In Python:

```bash
export NC_CLOUD_SDK_DEBUG=1
```

- Python: suddenly, you see logs of every API call, topic, payload, and response.

- Go: just enable logging and watch the SDK internals spill out.

Debug mode saved us countless hours during rollout.
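One plausible way to wire an environment-variable debug switch like `NC_CLOUD_SDK_DEBUG` is to map it onto a standard `logging` level. This is a hypothetical sketch, not the SDK's implementation: the logger name `nc_cloud_sdk_py` and the helpers `configure_debug_from_env` and `log_call` are assumptions.

```python
import logging
import os

# Hypothetical logger name; the real SDK may use a different one.
logger = logging.getLogger("nc_cloud_sdk_py")


def configure_debug_from_env(var: str = "NC_CLOUD_SDK_DEBUG") -> bool:
    """Enable DEBUG logging when the variable holds a truthy value such as "1"."""
    enabled = os.getenv(var, "").strip().lower() in {"1", "true", "yes", "on"}
    logger.setLevel(logging.DEBUG if enabled else logging.WARNING)
    # Attach a console handler once, so repeated calls don't duplicate output.
    if enabled and not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s"))
        logger.addHandler(handler)
    return enabled


def log_call(operation: str, **details) -> None:
    """Record one API call (for example topic, payload, response) at DEBUG level."""
    logger.debug("%s %s", operation, details)
```

Keeping the switch in an environment variable means debug output can be turned on per deployment without touching application code, which fits the rest of the SDK's design.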

The Payoff

What did we gain?

- Faster migrations: We flipped services from AWS to GCP in hours, not weeks.

- Happier developers: No more re-learning provider-specific quirks.

- Future-proofing: Adding another cloud is now an extension, not a rewrite.

And honestly, the best part?

Developers could focus on building features, not fighting SDK differences.

What’s Next?

- Building a testing harness that simulates both AWS and GCP locally.

- Expanding to more cloud features and making the SDK more robust.

Final Thoughts

The Netcore Cloud SDK is one of those things you wish you had from day one. It’s not glamorous, but it quietly saves you time, sanity, and vendor headaches.

If you’re starting a project today, ask yourself:

👉 Do I want to tie my code to one cloud forever?

If the answer is no, an SDK-first approach will make your life a lot easier when — not if — cloud strategy shifts.